January 22, 2026

AI in Legal Practice

Amy Swaner

AI transcription tools provide genuine productivity benefits, but those benefits must be pursued within the constraints that wiretap, privacy, and biometric laws impose. The litigation examined in Parts 1 and 2 demonstrates that liability turns not on whether recording occurs, but on vendor capability and economic incentives to reuse conversational data.

AI transcription creates legal risk when vendors extract, retain, and reuse conversational data. This article provides actionable guidance for each party involved in virtual meetings with AI note-takers and for lawyers using or advising on these tools.

The Five Parties in Every AI-Recorded Meeting

Regardless of how many people are invited to, or attend, a meeting with AI transcription and recording, there are at least five participants, even in a meeting between two people. Each of these participants has certain obligations and certain abilities. Each is addressed below.

1. The Passive Participant

The passive participant's data is being captured, often without their knowledge or meaningful consent. Options are limited but important.

What you can do:

If you are the Passive Participant in a virtual meeting, here are some steps you can take to protect yourself if you do not want your data, including your voiceprint and image, collected and used to train AI models:

Check the participant list for bot names before speaking. Look for names like 'Otter.ai,' 'Notetaker,' or unfamiliar participants in meetings where you expected a specific number of attendees.

Check the meeting application for indications of recording or transcription. Zoom, Teams, and other applications will have a visual indicator on screen, and many will have an audio prompt when joining.

Object immediately if you are uncomfortable with recording. State clearly: 'I do not consent to this meeting being recorded or transcribed by third-party AI tools.'

Ask directly: 'Is this meeting being recorded by any AI note-taking tools? Who has access to the recording?' This should force explicit disclosure or at least show that you cared who had access to what.

Leave the meeting if necessary. If recording continues without your consent and the content is sensitive, you are entitled to decline participation.

Document unauthorized recordings. Note the date, participants, bot name if visible, and lack of consent. This creates evidence for potential claims.

Limitation: This list is not foolproof. In large meetings (webinars, training sessions with hundreds of participants), identifying all bots is impractical. In these contexts, assume recording is occurring unless explicitly stated otherwise.

2. The Participant Who Brings a Notetaker Bot

If you bring an AI note-taker to a meeting, you have duties to all other participants and to the meeting organizer, whether you are acting with no intent to cause harm or acting more strategically.

What You Should Do:

Obtain permission from the meeting organizer before the meeting. Do not assume consent. The organizer may have policies, client obligations, or confidentiality concerns that prohibit AI recording.

Notify all participants you intend to include a bot before the meeting begins. Include notice in calendar invites: 'I will be using [Vendor Name] AI transcription for this meeting. This tool records audio, transcribes content, and [may/will not] be used to train AI models. If you object, please let me know before the meeting.'

Notify participants what happens to captured data. This requires you to actually know and understand your vendor's data practices. Do not assume—read the terms of service and privacy policy and do the research.

Name your bot clearly. Use 'Jane's Otter.ai AI Notetaker' or 'Notetaker Bot - John,' not anthropomorphized names like 'Emily Adams' that disguise the bot's nature.

Acknowledge bot use if asked. If another participant asks whether you are recording, answer honestly and immediately.

Disable the bot if requested. If any participant objects, turn off the bot immediately or leave the meeting.

3. The Meeting Organizer

As the Meeting Organizer, you control the terms of engagement and bear the majority of responsibility for ensuring the meeting complies with organizational policies and legal requirements.

What You Must Do:

Establish and communicate recording policies. Decide whether AI note-takers are permitted, prohibited, or require advance approval. Communicate this policy when scheduling meetings.

Notify participants at meeting invitation and at meeting start. If you are recording, disclose this in the calendar invite and verbally at the start of the meeting. Identify the specific tool, who has access to recordings, and the retention period.

Verify compliance with organizational standards. Confirm that planned recording complies with your organization's data governance, security, and privacy policies. For law firms, confirm compliance with ethics rules and client engagement terms. If you intend to record client meetings, this disclosure should be stated in your engagement letter. Get specific consent for the meeting to be recorded. Notify the client ahead of time how the data will be used not only by your firm but also by the notetaker app. These steps are the basis of informed consent in regard to meeting recording.

Monitor for unauthorized bots. Check the participant list for unexpected attendees or bot-like names. Challenge unidentified participants. Meeting organizers typically have the ability to expel an attendee from the meeting.

Stop unauthorized recording immediately. If you discover a participant has brought an unauthorized bot, give them the opportunity to disable it. If they fail to do so, or fail to do so in a timely manner, remove them from the meeting.

Document participant consent. Maintain records showing that participants were notified and consented, particularly for sensitive meetings (client consultations, M&A discussions, litigation strategy).

4. The Virtual Meeting Platform Providers

Most platforms provide basic recording notifications but they do not adequately distinguish between platform recording and third-party bot recording, nor do they give meeting organizers sufficient control over bot participation.

Meeting platform makers such as Zoom, Teams, and Google Meet are making an effort to provide transparency but could do better. Otter.ai is doing far less than it could to prevent unauthorized data capture. Meeting platforms are essentially the party hosts of the virtual meeting world. You would not expect to be invited to a private party, only to be ambushed by self-serving attendees or uninvited guests who were taking unauthorized images of you and recording your voice. Yet this is what meeting platforms are doing: they are allowing meeting attendee ‘guests’ to attend a meeting, capture data, and maintain it in such a persistent state that it cannot be meaningfully removed.

These virtual meeting platform creators have a disincentive to restrict AI bots since they likely benefit from captured data themselves. Both Zoom and Teams have weathered the storms of public scrutiny of how they are using the data they have gathered. It appears that the worst offender, Otter.ai, will be close behind them.

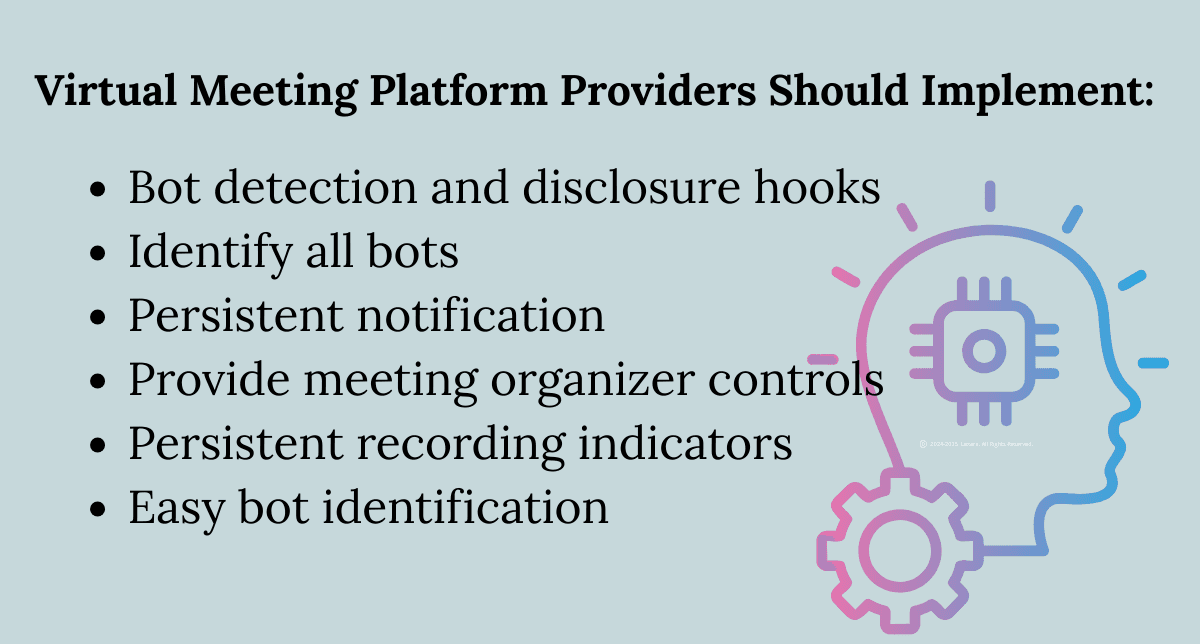

What Platforms Should Implement:

Virtual meeting platform providers should provide a far greater level of safety for those who are using their platforms.

Bot detection and disclosure hooks. The platform should include a hook to catch notetaker bots. The hook can automatically detect when a bot joins a meeting (based on API usage, participant behavior patterns, or declared bot status).

Identify all bots and hold them in an electronic waiting room until the bot has identified itself to all participants.

Persistent Notification. Notification that a bot is attending a meeting should never be ‘one and done.’ People join late, miss initial notices, are distracted, or just fail to process. Microsoft Teams and Zoom both have a persistent notice that the meeting is being recorded or transcribed, so a “Notetaking Agents are participating in this meeting” notice would be easy for them to add. Notetaker bots should be required to maintain persistent notification.

Provide Meeting Organizer controls for bots. Provide organizers with the option to prohibit bots entirely, require advance approval for bots, or limit bot capabilities (e.g., allow transcription but prohibit screenshot capture).

Persistent recording indicators. All platforms should maintain a visible, persistent banner whenever any recording is occurring—whether by the platform, a participant, or a third-party bot. And these indicators must be clear and unavoidable. Common and reputable platforms already do this.

Easy bot identification. Bot makers should create distinctive icons—for example a robot symbol—for the bots they create. As an added layer of protection, the meeting platform should require 'Bot' or similar keyword in participant names.
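The platform controls listed above (detection, a waiting room, and organizer settings) could be combined into a single admission decision. The Python sketch below is illustrative only: the `Participant` fields, the `BotPolicy` options, and the name pattern are assumptions made for this example and do not describe any platform's actual API.

```python
import re
from dataclasses import dataclass
from enum import Enum


class BotPolicy(Enum):
    """Organizer-selected setting (hypothetical option names)."""
    PROHIBIT = "prohibit"
    REQUIRE_APPROVAL = "require_approval"
    ALLOW = "allow"


# Assumed name pattern; whole-word match so a surname like "Abbott" is not flagged.
BOT_NAME_PATTERN = re.compile(r"\b(bot|notetaker|otter\.ai)\b", re.IGNORECASE)


@dataclass
class Participant:
    display_name: str
    declared_bot: bool = False        # bot identified itself to the platform
    disclosed_to_all: bool = False    # bot has announced itself to attendees
    organizer_approved: bool = False  # organizer approved this bot in advance


def looks_like_bot(p: Participant) -> bool:
    """Flag a participant as a bot by declaration or by display name."""
    return p.declared_bot or bool(BOT_NAME_PATTERN.search(p.display_name))


def admission_decision(p: Participant, policy: BotPolicy) -> str:
    """Return 'admit', 'reject', or 'hold_in_waiting_room'."""
    if not looks_like_bot(p):
        return "admit"
    if policy is BotPolicy.PROHIBIT:
        return "reject"
    if not p.disclosed_to_all:
        return "hold_in_waiting_room"
    if policy is BotPolicy.REQUIRE_APPROVAL and not p.organizer_approved:
        return "hold_in_waiting_room"
    return "admit"
```

Note that a bot joining under a human-sounding name such as 'Emily Adams' is admitted by this sketch unless it declares itself, which is why name conventions alone are not a sufficient safeguard.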

5. The AI Note-Taker Vendors

Bot makers range from responsible vendors providing genuine productivity tools to companies engaged in systematic data extraction disguised as transcription services. The litigation in Parts 1 and 2 of this article demonstrates how the latter creates substantial legal exposure.

In our party analogy, the Notetaker Bots are the sometimes-uninvited guests that are walking around picking the pockets of the other party guests. However, in the meeting context, the harms are greater than they tend to be at a party, because attendance at parties tends to be voluntary while attendance at meetings tends to be required.

Actions Responsible Bot Vendors Should Take:

Bot makers can provide transparent information on the use and training of their AI tools. At the very least, they can provide notification that the Bot is attending the meeting.

Automatic disclosure on bot joining. When the bot joins a meeting, automatically post a message in the meeting chat: '[Vendor Name] is recording this meeting. We capture [audio/video/screenshots]. Data is stored [location] and [will/will not] be used to train AI models. To object or for more information, visit [URL].'

Bot interrogation system. Allow meeting organizers and participants to query bots in real time: 'What data are you capturing? Where is it stored? Who has access? Will it be used for AI training?' And if it cannot satisfactorily answer these questions, it should be disallowed from the meeting. Most meeting platforms include a chat function that allows targeting or tagging a specific meeting participant. The Bot should accurately respond to chat messages or DMs requesting details on its capabilities.

Clear bot identification. Use a robot icon or similar visual indicator. Require 'Bot' or 'AI' in the participant name. Make bot nature obvious at a glance.

Default to no training. Do not use customer meeting data to train AI models unless the customer explicitly opts in. Make training opt-in, not opt-out or hidden in terms of service.

Provide granular controls. Allow customers to configure data retention periods, disable specific features (screenshots, voiceprints), and delete data on demand.

Comply with biometric laws. If generating voiceprints, comply with BIPA: obtain informed written consent, publish retention and deletion policies, do not sell or profit from biometric data.
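The automatic disclosure described in the list above amounts to filling in a fixed template when the bot joins. The Python sketch below shows one way to build that chat message; the function name, its parameters, and the example vendor and URL are hypothetical and chosen only for illustration.

```python
def build_disclosure(vendor, captures, storage_location, used_for_training, info_url):
    """Fill in the join-time disclosure template for a note-taker bot."""
    # Choose the wording that matches the vendor's actual training practice.
    training = "will" if used_for_training else "will not"
    return (
        f"{vendor} is recording this meeting. "
        f"We capture {'/'.join(captures)}. "
        f"Data is stored {storage_location} and {training} be used to train AI models. "
        f"To object or for more information, visit {info_url}."
    )


# Example with made-up values:
message = build_disclosure(
    vendor="ExampleNotes",
    captures=["audio", "screenshots"],
    storage_location="on servers in the United States",
    used_for_training=False,
    info_url="https://example.com/privacy",
)
```

The message is only as accurate as the values passed in, so the vendor's real data practices must drive the parameters rather than the other way around.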

AI Notetaker vendors are not likely to take these actions without significant legal or financial pressure. Companies like Otter.ai (as alleged in the consolidated complaint) that auto-join meetings, capture multi-modal data, generate voiceprints, and reserve rights to use data for model training exemplify the practices that create ECPA, CIPA, and BIPA exposure.

Best Practices for Lawyers Using AI Transcription Tools

1. All-Party Consent

Require all-party consent for every meeting where AI transcription is used. Do not rely on ECPA's one-party consent rule—it is inadequate when conversational data has commercial training value.

Pre-meeting notice: Include explicit disclosure in calendar invites identifying the AI tool, what data it captures, and whether data will be used for training.

In-meeting confirmation: Verbally confirm at meeting start that recording is occurring and provide opportunity to object.

Document consent: Maintain records (email confirmations, chat logs, meeting recordings) showing consent was obtained.

2. Vendor Due Diligence

Vendor selection is a risk management function, not just procurement. Although the Ninth Circuit’s decision in Popa casts doubt on this, it is possible that a vendor's contractual right to use data is sufficient for liability under the capability test (see Part 2). The questions below should inform your firm’s decision to employ a notetaker bot.

Critical Questions to Ask Vendors

Ask these questions of any vendor you’re considering. And in an abundance of caution, require written answers since policies and practices change quickly in AI-tool-land.

Does the vendor use meeting data to train AI models? If yes, is this opt-in or mandatory?

Where is data stored? Who can access it (vendor employees, subprocessors, affiliates)?

Does the tool generate voiceprints or other biometric identifiers? Can this be disabled?

Does the tool capture screenshots or video? Can this be disabled?

What indemnity does the vendor provide for wiretap, privacy, and biometric violations?

Can the vendor provide SOC 2, ISO 27001, or similar compliance certifications?

Red flag: Vendors unable or unwilling to answer these questions clearly should not be used for private or client communications.

Contract Terms

Your firm and clients will likely be very limited in negotiating for control of the terms of a built-in notetaker. You will likely only be able to negotiate the terms of Notetaker bot contracts in the enterprise context and with custom-built solutions. If you have an option, negotiate protective contract terms that limit vendor capability and rights. Here is what I suggest:

Explicit prohibition on training: 'Vendor shall not use Customer meeting data to train AI models or develop other products.'

Data minimization: 'Vendor shall collect only minimum necessary data. Customer may disable screenshots, voiceprints, and other optional features.'

Retention limits: 'Customer may configure retention periods. Vendor shall delete data upon expiration or Customer request.'

No third-party sharing: 'Vendor shall not share Customer data with affiliates or third parties without prior written consent.'

Uncapped indemnity: 'Vendor indemnifies Customer for ECPA, CIPA, and BIPA violations without liability cap.'

Operational Controls

Regardless of the notetaker, implement technical and operational safeguards:

Disable auto-join features. Require manual bot activation to ensure conscious consent.

Default to local recording when possible. Avoid sending data to third-party cloud servers.

Segregate by matter sensitivity. Prohibit AI transcription entirely for the most sensitive matters (litigation, M&A, internal investigations).

Train staff on consent requirements, how to identify bots, and incident response procedures.

Audit usage quarterly. Review vendor access logs and compliance with firm policies.
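Several of the safeguards above (manual activation, segregation by matter sensitivity, and the all-party consent practice discussed earlier) can be expressed as a simple gate that runs before a bot is switched on. The following Python sketch assumes hypothetical matter-type labels and a hypothetical function name; a firm's own policy would define the real categories.

```python
# Matter types for which this sketch prohibits AI transcription entirely.
# The labels are assumptions; they mirror the examples given in the text.
PROHIBITED_MATTER_TYPES = {"litigation", "m&a", "internal investigation"}


def may_use_ai_transcription(matter_type, all_party_consent, manually_activated):
    """Return True only if every safeguard in this sketch is satisfied."""
    if matter_type.strip().lower() in PROHIBITED_MATTER_TYPES:
        return False  # most sensitive matters: never transcribe with AI
    # Otherwise require documented all-party consent and manual activation.
    return all_party_consent and manually_activated
```

For example, `may_use_ai_transcription("Litigation", True, True)` returns `False` regardless of consent, because the matter type is on the prohibited list.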

Guidance for Lawyers Advising AI Note-Taker Vendors

Reevaluate Deidentification Claims, and Abandon Weak Claims

If your client claims they 'only train on deidentified data,' strongly advise reconsideration. This defense is weak and potentially counterproductive. Conversational data resists meaningful deidentification—context reveals speakers even when names are removed. From a litigation perspective, weak deidentification policies can be worse than none because they demonstrate awareness of data sensitivity combined with inadequate protection.

Better approach: Do not use customer data for training at all. If training is essential to the business model, make it genuinely opt-in with clear disclosure of what is being collected and how it will be used.

Map the Data Supply Chain

Create a detailed data map for your client's product:

What is captured? (audio, video, screenshots, chat, metadata, voiceprints)

Where does it flow? (cloud provider, geographic location, subprocessors)

How long is it retained? (per-customer configuration, automatic deletion)

For what purposes? (transcription only vs. training vs. analytics)

The more the data map resembles a private data extraction pipeline (capturing multi-modal data, sending to centralized storage, retaining indefinitely, using for training), the easier it is for plaintiffs to frame intrusion and conversion claims.

Limit retention and reuse to what is reasonably necessary for the stated service. Put these limits in both internal policies and customer contracts.
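The four data-map questions above can be captured in a small record, which also makes it easy to check how closely a product resembles the extraction pipeline described. The Python sketch below is a hypothetical structure, with field names and the "local"/"centralized" labels assumed for illustration; it is not a legal test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataMap:
    captured: set                   # e.g. {"audio", "screenshots", "voiceprints"}
    storage: str                    # "local" or "centralized" in this sketch
    retention_days: Optional[int]   # None means retained indefinitely
    purposes: set                   # e.g. {"transcription", "training"}


def extraction_signals(m: DataMap) -> list:
    """List which of the four pipeline characteristics the data map shows."""
    signals = []
    if len(m.captured) > 1:
        signals.append("multi-modal capture")
    if m.storage == "centralized":
        signals.append("centralized storage")
    if m.retention_days is None:
        signals.append("indefinite retention")
    if "training" in m.purposes:
        signals.append("used for training")
    return signals
```

A product that captures only audio, stores it locally for 30 days, and uses it solely for transcription returns an empty list; each added signal marks a point a plaintiff could emphasize.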

Treat Biometrics as High-Risk

If your client's product generates voiceprints for speaker identification, treat this as a BIPA compliance requirement, not a feature decision.

BIPA requires:

informed written consent before collecting biometric data,

published written retention and deletion policy,

prohibition on selling or profiting from biometric identifiers,

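and it is enforced through a private right of action with $1,000-$5,000 statutory damages per violation ($1,000 for negligent violations, $5,000 for intentional or reckless ones).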

Make voiceprint generation opt-in, not default. Provide clear disclosure of how voiceprints are generated, stored, used, and deleted. Do not use voiceprints for model training. Implement automatic deletion when customer relationship terminates.

If your client cannot comply with BIPA, disable persistent voiceprint features entirely and use session-only speaker identification.
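To see why voiceprints deserve this treatment, it helps to multiply the statutory damages figures above across a realistic volume of recordings. The Python sketch below does only that arithmetic; the function name and the figure of 10,000 violations are assumptions for illustration, and how violations are actually counted is a legal question this sketch does not address.

```python
# Per-violation statutory damages figures cited in the text.
BIPA_LOW = 1_000
BIPA_HIGH = 5_000


def statutory_exposure_range(violations: int) -> tuple:
    """Return (low, high) exposure in dollars for a given violation count."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    return violations * BIPA_LOW, violations * BIPA_HIGH


# Hypothetical volume: 10,000 violations.
low, high = statutory_exposure_range(10_000)
print(f"${low:,} to ${high:,}")  # prints "$10,000,000 to $50,000,000"
```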

Design for Minimal Capability

Under CIPA's capability test, your client's technical architecture and contract terms create liability exposure. Design the product to minimize vendor capability to access or reuse customer data:

Process data locally on customer infrastructure when feasible (edge computing).

Use customer-managed encryption keys so vendor cannot access data without customer cooperation.

Segregate customer data in isolated environments—no commingling across customers.

Default to ephemeral processing (process in memory, deliver transcript, delete audio).

Design AI models to improve only within each customer's environment, not across all customers.

The goal is to make unauthorized data use technically difficult, not just contractually prohibited.
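As one illustration of the ephemeral-processing item above (process in memory, deliver transcript, delete audio), the Python sketch below wraps a transcription call so the audio buffer is overwritten as soon as the transcript is produced. The `transcribe` callable is a hypothetical stand-in for whatever engine a vendor uses, and overwriting a buffer in Python is best-effort: it does not reach copies the engine itself may have made.

```python
from typing import Callable


def transcribe_ephemeral(audio: bytearray, transcribe: Callable[[bytearray], str]) -> str:
    """Transcribe in memory, then zero the audio buffer even if an error occurs."""
    try:
        return transcribe(audio)
    finally:
        # Overwrite the caller's buffer in place with zero bytes of equal length.
        audio[:] = bytes(len(audio))


# Usage with a stand-in transcriber that only reports the buffer size:
buffer = bytearray(b"raw-audio-bytes")
transcript = transcribe_ephemeral(buffer, lambda a: f"{len(a)} bytes transcribed")
```

After the call, `buffer` contains only zero bytes and the transcript is the sole remaining output, which mirrors the design goal of leaving the vendor nothing to reuse.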

One-Page Guidance

Share the following checklist with your firm’s clients and with compliance or risk evaluation teams, or feel free to use the checklist found on Lexara’s Resource page.

Quick Reference: Risk Mitigation Checklist

Use this checklist to assess and mitigate AI transcription risk.

For Every Meeting With AI Recording

☐ All participants notified before meeting (calendar invite disclosure)

☐ Verbal confirmation at meeting start, with an opportunity to object

☐ Bot clearly identified (name includes 'Bot' or 'Notetaker')

☐ Persistent visual indicator active during recording

☐ Consent documented (email, chat log, or recording itself)

☐ Participants know what happens to data (local storage only vs. cloud/training)

When Selecting an AI Transcription Vendor

☐ Vendor provides written answers to data use questions

☐ Training is opt-in or can be disabled entirely

☐ Voiceprint generation can be disabled

☐ Screenshot capture can be disabled

☐ Data retention is customer-configurable

☐ Vendor provides SOC 2 or equivalent certification

☐ Vendor offers uncapped indemnity for privacy/wiretap violations

During Vendor Contract Negotiations

☐ Explicit prohibition on using customer data for training

☐ Data minimization obligation (minimum necessary data only)

☐ No third-party sharing without prior written consent

☐ Customer can configure retention periods and request deletion

☐ Vendor must encrypt data in transit and at rest

☐ Breach notification within 24 hours

☐ Indemnity for ECPA/CIPA/BIPA violations survives contract termination

For Law Firm Operational Controls

☐ Written AI transcription policy established

☐ Matters classified by sensitivity (prohibit AI for most sensitive)

☐ Auto-join features disabled (manual activation required)

☐ Default to local recording when possible

☐ Staff trained on consent requirements and bot identification

☐ Quarterly audits of AI tool usage and vendor access logs

☐ Incident response procedures for unauthorized recordings

Red Flags - Do Not Use This Vendor If:

✗ Vendor cannot or will not answer data use questions in writing

✗ Terms of service include 'may use data to improve services' without opt-out

✗ Voiceprints are generated by default and cannot be disabled

✗ Data retention is indefinite with no customer control

✗ Vendor refuses to provide compliance certifications

✗ Indemnity is capped below statutory damages exposure

✗ Vendor reserves right to share data with 'partners' or affiliates
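The red flags above lend themselves to a repeatable screening step during vendor review. The Python sketch below is one hypothetical way to encode them; the `VendorProfile` field names and the exposure estimate passed in are assumptions for illustration, and the output is a prompt for legal review rather than a substitute for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VendorProfile:
    answers_in_writing: bool
    improve_services_clause_without_opt_out: bool
    voiceprints_can_be_disabled: bool
    customer_controls_retention: bool
    provides_certifications: bool
    indemnity_cap: Optional[float]   # None means uncapped
    shares_with_partners_or_affiliates: bool


def red_flags(v: VendorProfile, estimated_statutory_exposure: float) -> list:
    """Return the checklist red flags that this vendor profile triggers."""
    flags = []
    if not v.answers_in_writing:
        flags.append("no written answers to data use questions")
    if v.improve_services_clause_without_opt_out:
        flags.append("'improve services' data use without opt-out")
    if not v.voiceprints_can_be_disabled:
        flags.append("voiceprints cannot be disabled")
    if not v.customer_controls_retention:
        flags.append("indefinite retention with no customer control")
    if not v.provides_certifications:
        flags.append("no compliance certifications")
    if v.indemnity_cap is not None and v.indemnity_cap < estimated_statutory_exposure:
        flags.append("indemnity capped below statutory damages exposure")
    if v.shares_with_partners_or_affiliates:
        flags.append("reserves right to share data with partners or affiliates")
    return flags
```

An empty list means none of the listed red flags were triggered; any entry corresponds to a 'do not use' item in the checklist.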

Conclusion

Each party in the AI transcription space—passive participants, bot users, meeting organizers, platform makers, and bot creators—has a role in reducing risk. For lawyers, the imperative is clear: implement all-party consent, conduct rigorous vendor due diligence, negotiate protective contracts, and establish operational safeguards. For those advising AI vendors, the guidance is equally direct: design products to minimize capability, abandon weak deidentification claims, and treat biometrics as high-risk regulated data.

Approach AI transcription with the mindset of a party host: would a party guest expect this type of treatment? Informed risk management can help your clients realize productivity benefits while minimizing legal exposure. By contrast, clients that deploy these tools without attention to consent, vendor contracts, and operational controls may find themselves defending class actions under statutes with statutory damages that can generate crippling exposure when multiplied across thousands of recorded conversations.

© 2026 Amy Swaner. All Rights Reserved. May use with attribution and link to article.
